I'm a bit tired of voicing my biases about the things in the industry that grind my gears, so for now, let's go down a more mellow route and talk about how far we've come with 3D rendering since the first ray-traced render made by J. Turner Whitted in 1979.

Yeah, yeah, that ray tracing, not the kind my generation prefers, which is the real-time ray tracing used to generate almost lifelike renderings.

For now, I've only got one game that uses RTX lighting, and that is ALAN WAKE 2. But I also have the MATRIX Unreal demo on my PlayStation 5, so I'm familiar with real-time ray tracing.

But right now, I'm not talking about the tech used for video games but rather about pre-rendered CGI: how far we've come since those first renders, and even how far we've come since people were first able to do their own renders on their own Windows 9x/NT computers in the 90s.

Why did I leave out the early wireframe and solid Gouraud shading of the 70s?

Because I'm more impressed with how far pre-rendered ray-tracing has come since 1979.

Anyway,

A lot of the CGI done back in the day dealt with limitations that ruled out most practical use in imagery or film before Toy Story. As nice-looking as some of these shorts were, they were made on hardware that was way out of reach for the average consumer.

A lot of the early CGI before the mid-80s relied on hardware that could take up an entire room: cabinet-sized mainframe computers connected to a teletype or DEC terminal.

Before 1985, the smallest machines that could do CGI were probably the refrigerator-sized computers referred to as MINICOMPUTERS.

It took companies such as APOLLO and SILICON GRAPHICS to revolutionize things with WORKSTATIONS: computers that looked like consumer desktops but had twice the computing power of your typical IBM PC XT or Commodore.

But even then, many of these workstations were barely affordable for the average consumer. On top of that, as I've mentioned before, many people who buy or build computers only care about playing video games and nothing else. That explains why they spend over $1000 on the best processor with the most cores, the most RAM, and the best GPU out there with the most VRAM, the most CUDA cores, and the highest clock speed.

Workstations like SGI's aren't built for gaming. They're built for rendering, and they run their own proprietary UNIX operating system too.

But getting something like the SGI INDY would have been a starting point for many a 3D artist in the mid to late 90s, since the INDY was fairly cheap at around the $5000 mark. That's still considerably high, but I bet you many CG artists who started their studies in the 90s got this machine through an educational discount.

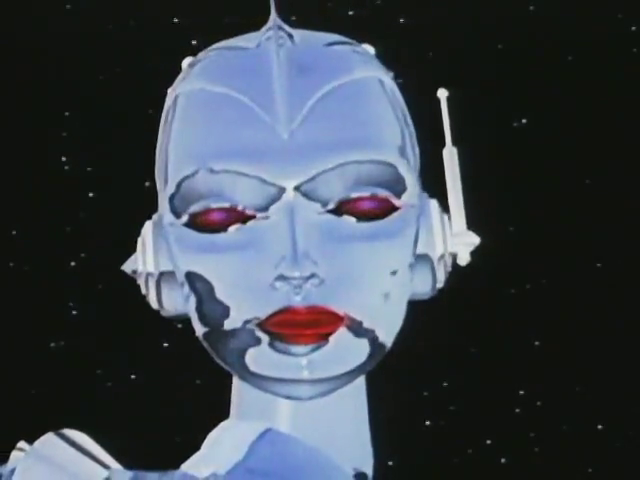

Here are some images pulled from a CGI short film collection Laserdisc:

Just imagine how long these would have taken to render on the hardware of the day.

Compare that to CGI rendered on today's x64-based hardware using tools like MAYA and BLENDER.

The RTX 4090 above is a ZOTAC AMP AIRO Extreme card, but I don't have that card.

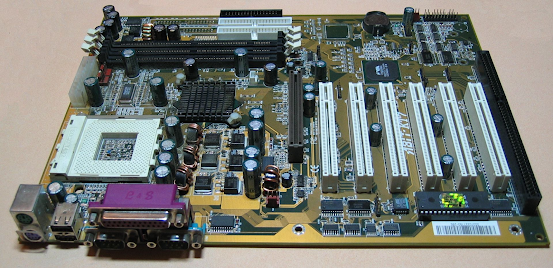

Currently, ADA LOVELACE is the best architecture to go for in GPUs for 3D rendering, but beware if your card comes with this..... ok ok.. practically every RTX 40 series card comes with this:

This is the dreaded 12VHPWR cable. It's a hassle due to its rather bulky and ungodly nature, as well as those melting cable rumors I've been hearing about, which are attributed to excited gamers who don't push these cables in all the way.

That's like a weight lifted off my shoulders with no issues. And this is in 4K resolution, so it's quite a feat.

I know I'm straying from my CalArts Style post and my DOUG posts, but again, I want to go down a more mellow path.
